Child Safety on Twitter (X) - A Look At Platform Challenges

Online spaces like the platform formerly known as Twitter are places where people connect, share thoughts, and keep up with what's happening. Yet keeping these digital gathering spots safe for everyone, especially younger users, is a constant challenge. So much material is uploaded every second that platform managers struggle to control everything that appears. This ongoing effort to maintain a secure environment touches many aspects of how these services operate, from who decides what stays up to how they handle the less desirable parts of human interaction online.

The platform, now called X, has gone through significant shifts over the past couple of years. Its value has reportedly fallen sharply since its acquisition, and a good number of advertisers have stepped back. This kind of retreat often happens when there are concerns about the material found on a platform or the overall tone it projects. It speaks to the delicate balance between open expression and keeping the platform safe for everyone who visits, especially when sensitive topics, such as anything involving children, come into view.

The stories from users themselves paint a fairly clear picture. Some feel that the platform's handling of account suspensions and content removal is frustrating. Unwanted material appears, gets taken down, and then similar content pops up somewhere else, in an ongoing game of whack-a-mole. This constant back-and-forth shows how much persistent effort it takes to keep the platform clean and secure, a task with direct implications for the presence of inappropriate content, including material that sexualizes minors.

Table of Contents

- The Shifting Ground of Online Platforms

- What Happens When Content Goes Wrong on Twitter?

- Why Do Advertisers Leave Twitter?

- How Does Twitter Address Problematic Content and Child Safety Issues?

- Who is Behind the Problematic Content?

- Understanding User Experience and Child Safety

- The Financial Side of Platform Safety

- The Ongoing Efforts to Maintain a Safer Environment

The Shifting Ground of Online Platforms

The online world, particularly places like Twitter, is constantly changing. What was once a rapidly growing space for conversation has seen its number of active accounts decline. According to Google, the platform had around 368 million accounts in 2022, a number that has since fallen to about 250 million. A drop of that size suggests a shift in how people view and use the service, and platform managers surely watch it closely. Meanwhile, other platforms such as Threads have quickly built a large user base, reaching 175 million users within a year of launch. This competition pushes every platform to think about what makes people stay and what makes them leave.

The platform's financial standing has also changed considerably. Its value today is reportedly more than 70% below what it was when its current owner took over a couple of years ago. This decline is often linked to several factors, including how the platform is perceived by the public and, significantly, by those who pay to place their messages there. Advertisers are particular about where their brand names appear, and they tend to avoid places whose content or associations they find problematic. That matters for any online service trying to stay financially healthy.

What Happens When Content Goes Wrong on Twitter?

Anyone who spends time on social media eventually comes across things that don't sit right. Users talk, for instance, about "dumb shenanigans" that get accounts shut down. Yet as soon as one account is removed, new ones appear, turning the platform's cleanup efforts into a game of whack-a-mole. This continuous cycle poses a big challenge for those running the service: how do you effectively deal with a constant stream of unwanted material? Frankly, it can make the platform feel messy for users and, more importantly, harder to keep safe for everyone.

Users sometimes express frustration with how the platform handles these situations. Comments describe the service as "kind of shit with the bans and snitching," suggesting that the community doesn't always see its methods for removing content and accounts as effective or fair. That dissatisfaction can affect how people engage with the platform and how secure they feel while using it. Meanwhile, the steady appearance of inappropriate material, whatever its nature, leaves the platform's safety teams with a never-ending job of managing the flow of content and keeping things in order.

The Constant Battle Against Undesirable Content

The fight against unwanted content, particularly anything as serious as material that sexualizes minors, never ends for online platforms. So-called "amp accounts," for instance, are sometimes shut down, and some people express surprise at this given all the "garbage that gets posted on that platform." Users seem to feel there are bigger problems to tackle, yet specific types of accounts get targeted. This highlights the difficult choices platforms must make about which content to prioritize for removal and how to go about it. Given the sheer volume of material, decisions about what to take down, and when, are made constantly, and they can have a big impact on the user experience.

Removal is only part of the job, though. The harder part is keeping up with people who are determined to post problematic material. The whack-a-mole comparison is apt: stopping one source of unwanted content often just leads to another popping up elsewhere. Platforms therefore have to keep developing new ways to identify and remove harmful content, which demands significant resources and ongoing vigilance. The goal is a platform where people feel comfortable and secure, without worrying about encountering distressing material.

Why Do Advertisers Leave Twitter?

Advertisers are particular about where their brand names show up. Many have stepped away from the platform, often citing a desire not to be linked with certain types of content; they have been described as not wanting to be associated with material characterized as "homophobic" or "antisemitic." Decisions like these are a clear signal of how much a platform's overall content environment matters. When content widely considered offensive or harmful becomes prominent, advertisers grow nervous about their brand's image, which is a very real concern for them.

The departure of these paying partners naturally has a big impact on the platform's financial health. When significant sources of income go elsewhere, the company is left in a difficult position. The exodus isn't only about specific controversial statements; it reflects the broader perception of whether the platform is a safe and reputable place for advertisers' messages. If the general feeling is that the platform struggles to control undesirable content, or it becomes known for hosting material that many find unacceptable, businesses will almost certainly reconsider their investment. Maintaining a clean and respectable content environment is therefore not only about user safety but also about the platform's ability to earn money and stay in business.

The Impact of Content on Brand Partnerships

The material that appears on a platform directly affects its ability to attract and keep brand partnerships, especially where sensitive issues like child safety are involved. When advertisers see content that could damage their reputation, they pull back. This is a straightforward business decision. They pay to reach potential customers and want their message seen in a positive light, not next to something that could cause public outcry or negative associations. So if a platform struggles with "garbage" content or has moderation problems, it poses a clear risk to any company looking to promote itself there.

Losing advertisers has significant consequences for any online service. Less advertising income means fewer resources for development, maintenance, and, crucially, content moderation. The result is a vicious cycle: if the platform can't keep its content clean, it loses advertisers, and if it loses advertisers, it has less money to invest in keeping its content clean. This dynamic underscores how vital robust systems for managing and removing problematic material are, not just for users' well-being but for the platform's long-term survival. In many respects, the platform's financial health and its commitment to safety are two sides of the same coin.

How Does Twitter Address Problematic Content and Child Safety Issues?

The platform has taken some steps to address problematic content. Twitter, now X, has previously barred accounts from advertising on its service, one form of consequence for those who violate its rules. In one notable instance, the platform also chose to donate a substantial sum, the $1.9 million that a particular organization had spent globally on advertising, to academic research on elections and other initiatives. This suggests an effort to support the broader public good, perhaps to offset negative perceptions or to contribute to solutions for online challenges.

However, some users feel the platform's approach to moderation is far from smooth. Descriptions of the service as "kind of shit with the bans and snitching" imply frustration with how account removals are handled and how reports of bad content are processed. This feedback matters because it points to areas where the platform could improve its systems for identifying and dealing with inappropriate material. The aim is to make these processes more effective and more transparent, so that users feel their concerns are heard and acted upon.

The Struggle with Moderation and Preventing Harmful Material

Preventing the spread of sensitive material, such as anything that sexualizes minors, is a constant struggle for online platforms. Because the content is user-generated, new unwanted material can appear at any moment. That is why users describe "dumb shenanigans" getting accounts shut down, only for new ones to pop up quickly in a game of whack-a-mole. It illustrates how hard it is to keep up with bad actors who keep finding new ways to circumvent rules and detection methods. There is no simple fix; the platform has to keep adapting.

Moderating content involves not just removing what clearly breaks the rules but also handling reports from users. When people feel that reporting content (what some call "snitching") doesn't lead to quick or effective action, they may stop participating in safety efforts. The platform therefore needs robust reporting mechanisms and timely follow-through. The "garbage that gets posted on that platform," as one comment puts it, shows there is always more work to do in refining moderation to keep the platform as safe as possible for all its visitors, especially the most vulnerable.

Who is Behind the Problematic Content?

Problematic content often doesn't appear out of nowhere; sometimes there are hints of organized efforts. Some observers claim, for example, that certain images are run by "the same people" behind other known sites. Statements like this suggest that networks or groups may be creating and distributing certain types of material across multiple online spaces. That implies a more coordinated operation than individual users posting at random, an important distinction when trying to combat the problem.

The remark that these operations lack "the talent for that" may suggest that, while organized, their methods are not particularly sophisticated, or their content is of lower quality. Even so, distribution by what appears to be a connected group makes the material a more persistent challenge for platforms. Identifying these networks and understanding their methods is a crucial step toward shutting down the sources of unwanted material, work that goes beyond removing individual posts and requires deeper investigation into where the content comes from.

Identifying the Sources of Concerning Images

Pinpointing the origins of concerning images, especially any that may depict minors, is a complex undertaking for any online service. The observation that "these pics that most sites use are from a few formats" could imply common patterns in how such images are created or shared. That kind of information can help those developing tools to automatically detect and remove such material: if specific formats or technical details link these images, they give investigators and content safety teams a valuable lead.

The suggestion that certain images are managed by the "same people" as other problematic sites points to a need for platforms to collaborate and share information about bad actors. If individuals or groups operate across multiple platforms, a coordinated effort among services could be far more effective than each one acting alone. The fight against harmful content isn't confined to one platform; it requires understanding the broader landscape of online misconduct and how different actors are connected. Such intelligence is vital for getting ahead of the problem rather than just reacting to it.

Understanding User Experience and Child Safety

For many people, the platform is a place to "keep up to date with friends" and follow "what's happening." That desire for connection and information is central to the user experience. People upload personal images, and the profile-photo guidance asks them to "make sure this is a photo of you that is recognisable." This emphasis on real identity and personal connection underscores the platform's role as a social space. When problematic content appears, though, it can seriously affect how safe and comfortable users feel. Undesirable material can make people hesitant to share personal details or engage freely, which ultimately changes the social experience the platform aims to provide.

Despite these challenges, a good number of people still consider the platform worthwhile. Surveys by Mintel in 2016 and by Twitter insiders in 2017 found that more than half of participants, between 51% and 58%, agreed it was a good place to be. Even with the "dumb shenanigans" and moderation frustrations, people still see value in being on the platform. That positive sentiment can erode quickly, however, if safety concerns become too prominent. Balancing free expression against a secure environment for all users, especially young people, is a continuous tightrope walk, and maintaining trust is key to keeping users engaged and feeling secure.

The Financial Side of Platform Safety

An online platform's financial well-being is closely tied to its content safety practices. The platform's value has reportedly dropped by more than 70% since its current owner acquired it a couple of years ago. Such a decline isn't just about market trends; it often reflects how the platform is perceived, particularly by those who bring in the money: the advertisers. The observation that "fleeing advertisers evidently didn't want to be associated with his homophobic, antisemitic" content points to a broader concern about the material present on the service. The same principle extends to every form of undesirable content, including material that sexualizes minors.

When advertisers pull out, the result is serious financial strain. Less advertising income leaves fewer resources for every kind of operation, including the crucial work of content moderation and safety, and the cycle can be hard to break. If a platform can't assure brands that their ads won't appear next to harmful or controversial material, those brands will take their business elsewhere. That puts heavy pressure on the platform to invest in its safety infrastructure, not only because it's the right thing to do for users but because its survival depends on it. The platform's economic health is directly tied to its ability to maintain a clean and trustworthy environment for users and business partners alike.

The Ongoing Efforts to Maintain a Safer Environment

Keeping an online platform safe, particularly from problems as serious as the sexual exploitation of minors, is a continuous and evolving task. The platform has, for example, barred certain entities from advertising and donated significant funds to academic research on elections and other initiatives. These actions show attempts to address broader societal concerns and perhaps to improve the platform's image. Still, user comments about "dumb shenanigans" and the whack-a-mole game of new accounts popping up indicate that the challenge of controlling unwanted content is far from over. It is a persistent battle that requires constant vigilance and adaptation.

The frustration expressed by some users regarding "the bans and snitching" and the surprise at how certain "amp accounts"

- Bill Orielly Twitter

- Special Kherson Cat Twitter

- Harrison Wind Twitter

- Twitter Adin Ross

- Emily Schrader Twitter

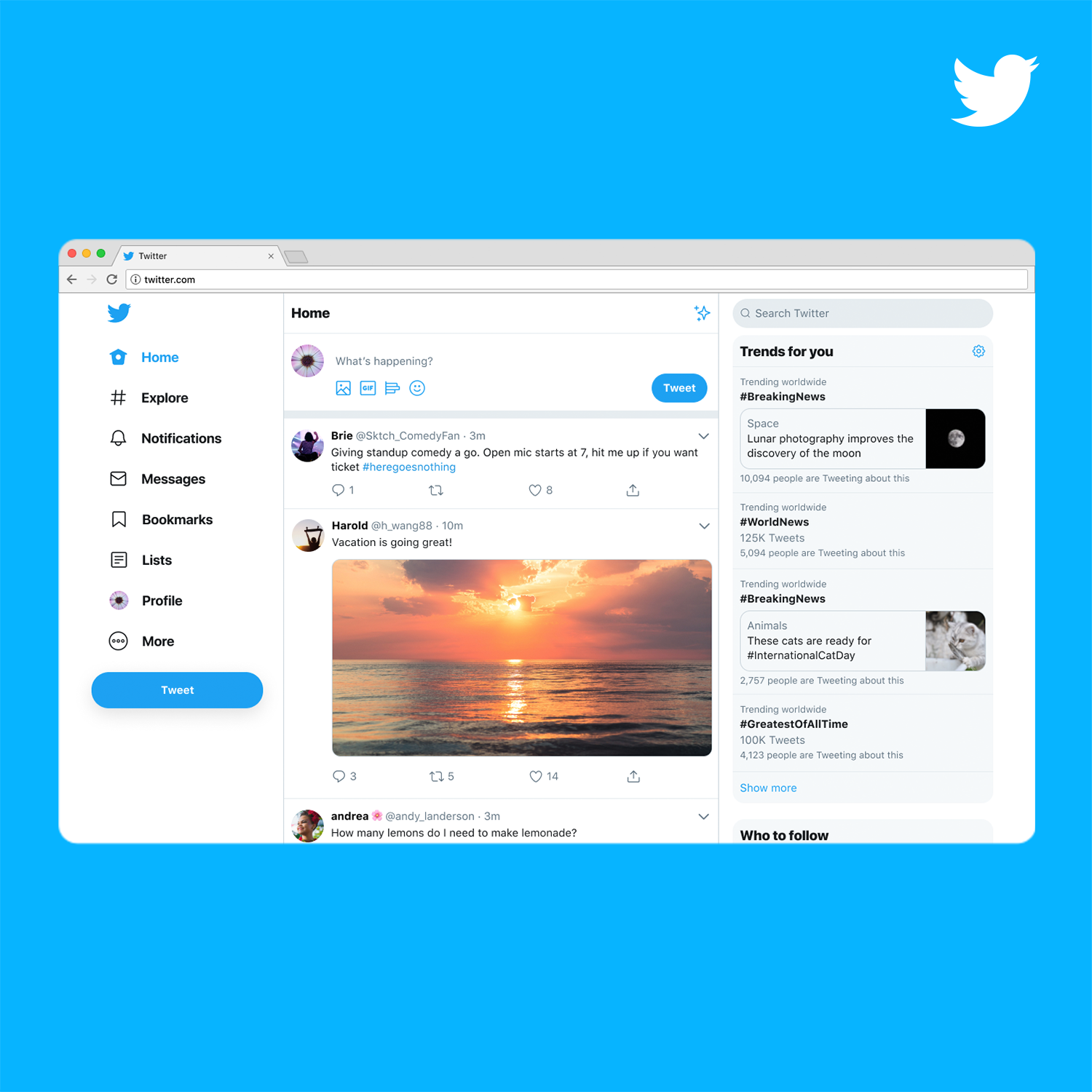

Introducing a new Twitter.com

Twitter to Develop a Decentralized Social Media Platform

Twitter Turns 17: A Look Back at the Evolution of the Social Media Platform