Twitter Gay Abuse Sex - Addressing Platform Content Issues

Social media platforms draw constant scrutiny, and Twitter, now known as X, is a place where people talk about almost everything. Different voices come together there, sometimes helpfully and sometimes in ways that cause worry. Any account of how people connect and share on the platform also has to consider the difficult parts: instances of abuse and the presence of sensitive content, both of which can be unsettling for users.

The platform has always been a hub for breaking news and keeping up with friends, which is a large part of why many people use it. People go there to see what is happening in the world right now, to follow conversations, and to stay connected with others. That openness brings challenges, because the range of topics is broad and some of them touch on very personal or upsetting experiences, such as abuse directed at gay users or sex-related content.

A platform built for public conversation therefore also has to deal with the harder parts of human interaction. That means looking at how content is managed, how people are kept safe, and what happens when things go wrong. It is a balancing act: supporting open talk while keeping the platform from becoming a place where harm can occur, particularly around sensitive topics such as anti-gay abuse and the handling of sex-related content.

Table of Contents

- Platform Changes and User Trust

- How Does Content Moderation Handle Gay Abuse?

- The Role of Advertisers and Platform Values

- What About Sex-Related Content on Twitter?

- User Identity and Safety on the Platform

- Why Do Accounts Keep Appearing Despite Bans and Twitter Gay Abuse Concerns?

- Monetary Contributions to Important Research

- Future Outlook for Platform Integrity

Platform Changes and User Trust

The platform has seen significant shifts over the past couple of years, and they have made many people question its overall direction. Not long ago the company's value was considerably higher; it is now worth less than when it was acquired. That change in value often reflects how people, including businesses that advertise, feel about the platform's stability and its future. It is a bit like a big ship steering through choppy waters, with everyone watching to see where it goes next, especially given the debate over content such as anti-gay abuse and sex-related discussions.

When businesses decide where to put their advertising money, they consider the kind of environment they want their brand to be seen in. If they feel the platform is becoming associated with certain kinds of content, such as homophobic statements or anything that promotes prejudice, they may decide to pull their ads. This is a real concern: some advertisers have reportedly already stepped away, partly because they did not want their products linked to viewpoints that could be seen as hateful. That is a clear signal about the kind of atmosphere a platform creates.

For many users and businesses, the platform's ability to maintain a welcoming and safe space has become a more prominent question. It comes down to trust. People want to feel that when they use the platform, they will not encounter content that is deeply offensive or harmful. That requires clear boundaries around what is acceptable, especially on matters of respect and safety for all communities, which is a large part of why some users worry about anti-gay abuse and other forms of harassment.

How Does Content Moderation Handle Gay Abuse?

There has been a lot of talk about how the platform manages its content, and some users have expressed frustration with how bans are handled and how reporting works. To them, the system for dealing with problematic content, including anti-gay abuse, can feel inconsistent or less effective than they would hope. People who report something they see as harmful expect the platform to act swiftly and fairly. That does not always seem to happen, which is disheartening for those affected.

Removing accounts that spread hateful messages or engage in abusive behavior can feel like a never-ending task. It is like a game of whack-a-mole: one account is taken down, and another pops right back up, often with a similar purpose. This makes it very difficult to get a handle on persistent problems, including abuse that targets specific individuals or groups. It also shows the sheer scale of the problem and how much effort is needed to keep the platform safer, especially where anti-gay abuse is concerned.

What does this mean for people who experience or witness abuse? Despite their best efforts to report it, they may still see that content or those accounts remaining active or quickly returning. This can lead to a feeling of helplessness and a loss of faith in the platform's ability to protect its users. The situation calls for a closer look at how these systems are designed and whether they are equipped to deal with the speed and volume of harmful content, particularly abuse aimed at gay users.

The Role of Advertisers and Platform Values

Advertisers look for a platform that aligns with their brand's values. They do not want their advertisements appearing next to content that promotes prejudice, especially homophobia or antisemitism. This matters because the platform's financial health relies heavily on these advertising partnerships. If businesses feel uncomfortable with the general atmosphere or with specific content, they will take their money elsewhere, and some reportedly already have.

The decision by advertisers to step back sends a direct message about the kind of content found on the platform. It suggests that some of the discussions or materials present are not just upsetting to users but also unacceptable to major companies. It is a practical consequence of how a platform is managed and what content it allows to stay up. Lost advertising revenue in turn limits the platform's ability to invest in improved moderation or new features, which could help address anti-gay abuse and other forms of harmful content.

In essence, the choices the platform's leadership makes about content moderation and what is permitted directly affect its financial well-being. A platform perceived as a safe and inclusive space is much more appealing to advertisers than one that struggles with hate speech or abuse. The platform's values, its content policies, and its business model are fundamentally connected. That connection underlines the importance of maintaining a positive environment for all users, particularly where anti-gay abuse and sex-related discussions are concerned.

What About Sex-Related Content on Twitter?

Sex-related content is a reality on many social media platforms, and Twitter is no exception. The topic comes up often, with some people noting that certain kinds of work, such as escort services, use these platforms to connect with clients. This is not limited to Twitter; other sites and apps see similar activity. It shows how these digital spaces are used for a wide range of purposes, some quite different from what might initially be expected.

Sex-related content raises questions about platform policies, especially regarding what is allowed and what crosses a line into abuse or exploitation. The challenge for any platform is to differentiate between consensual adult content and material that could be harmful, illegal, or exploitative. This is a delicate balance that platforms are constantly trying to manage. It requires clear rules and consistent enforcement, which are hard to get right given the sheer volume of content.

The conversation around sex-related content on the platform therefore intersects with broader discussions about safety, consent, and the potential for abuse. If such content is present, it should adhere strictly to legal and ethical guidelines, and mechanisms should be in place to prevent and address exploitation or harm. This is a crucial part of running a responsible platform, and it ties into the wider goal of protecting users from any form of abuse, including abuse aimed at gay users.

User Identity and Safety on the Platform

A big part of using a social media platform is creating a personal profile, and for many people that includes uploading a photo that helps others recognize them. This visual identity is important for connecting with friends and being seen as a real person. It also raises questions about safety and how easily someone can be identified or targeted. Choosing a profile picture that represents you, and whose public nature you are comfortable with, is a small but important step in managing your online presence.

The ease with which new accounts can be created, even after others have been banned, is a continuous challenge for platform safety. It is like a constant battle against those who wish to disrupt or harm others. Because new profiles can be generated quickly, individuals engaging in problematic behavior, including anti-gay abuse, can often find ways to return to the platform, which makes it harder to ensure a consistently safe environment for everyone. This persistence is a real problem for moderation teams.

This situation also raises concerns about how platforms verify identity and whether current methods are strong enough to prevent the rapid re-emergence of accounts removed for violating rules. It is a complex problem: user privacy has to be balanced against the need to enforce community guidelines effectively. The constant appearance of new accounts playing cat and mouse with the platform's rules makes it difficult to maintain a secure and respectful online space for all users.

Why Do Accounts Keep Appearing Despite Bans and Twitter Gay Abuse Concerns?

Many users ask why accounts that cause trouble, or engage in things like anti-gay abuse, seem to pop back up even after they have been removed. The answer has several parts. One is the sheer ease of creating new accounts. It takes little effort to sign up again, perhaps with a different email address or a slightly altered profile. This low barrier to entry means that individuals determined to continue their disruptive behavior can often do so, which is a continuous challenge for the platform's enforcement teams.

Another factor is the technical difficulty of identifying new accounts and linking them to previously banned ones. It is a bit like trying to catch smoke: people can use various methods to hide their true identity or location, making it harder for the platform to recognize them as repeat offenders. Because of this constant game of hide-and-seek, completely preventing the return of problematic users is very difficult even with sophisticated tools. It requires ongoing vigilance and adaptation on the platform's side.

While the platform does have rules and mechanisms for banning accounts, the internet offers many ways for determined individuals to circumvent them. The persistence of problematic accounts, including those associated with anti-gay abuse, understandably frustrates users who are trying to foster a more positive online community. It reflects the ongoing struggle between platform governance and the ingenuity of those who seek to bypass its controls, a problem that is very hard to solve completely.

Monetary Contributions to Important Research

The platform has also made a notable decision about funds it received from a particular advertiser, RT, which was later barred from advertising. The platform is donating the $1.9 million that RT spent globally on advertising to support academic research. The money is going towards initiatives related to elections and other important areas. It is a substantial contribution, and it suggests a commitment to using funds for public good in areas that affect society broadly.

This kind of donation can be seen as a way for the platform to contribute to broader societal discussions and research, particularly on election integrity. By funding academic studies, the platform supports efforts to understand complex issues and perhaps find solutions that benefit everyone. It is a different kind of impact than managing content directly, but it shows a willingness to engage with important topics on a larger scale.

Directing advertising revenue towards research is a distinct approach to corporate responsibility. It turns what might have been a problematic association into something that could yield valuable insights and support for democratic processes. It also shows that platforms can use their resources to address challenges beyond their immediate operations and contribute to a better public understanding of how information and influence work.

Future Outlook for Platform Integrity

Looking ahead, the platform faces ongoing challenges in maintaining its integrity and ensuring a safe environment for all its users. The issues around content moderation, particularly concerning anti-gay abuse and sex-related content, are not going to disappear overnight. Addressing them requires continuous effort to refine policies, improve enforcement mechanisms, and adapt as new forms of problematic content or behavior emerge. That constant process of learning and adjusting is typical of any large online community.

How users and advertisers perceive the platform will continue to be shaped by how effectively these challenges are addressed. If users feel heard and protected, and if advertisers feel confident that their brands are in a responsible environment, the platform can rebuild trust. That depends on demonstrating a clear commitment to user safety and responsible content management, which is the bedrock of any successful social media platform in the long run.

The path forward involves a dedication to fostering a more positive and secure online space. This means not just reacting to problems as they arise but also working proactively to prevent them. It is a big undertaking that involves listening to user feedback, collaborating with experts, and making tough decisions about what is acceptable. The goal, ultimately, is a place where people can connect and share freely without worrying about encountering harmful content or experiencing abuse.

- Tristen Snell Twitter

- Wu Tang Is For The Children Twitter

- Shannon Drayer Twitter

- Sam Mckewon Twitter

- Tcr Twitter

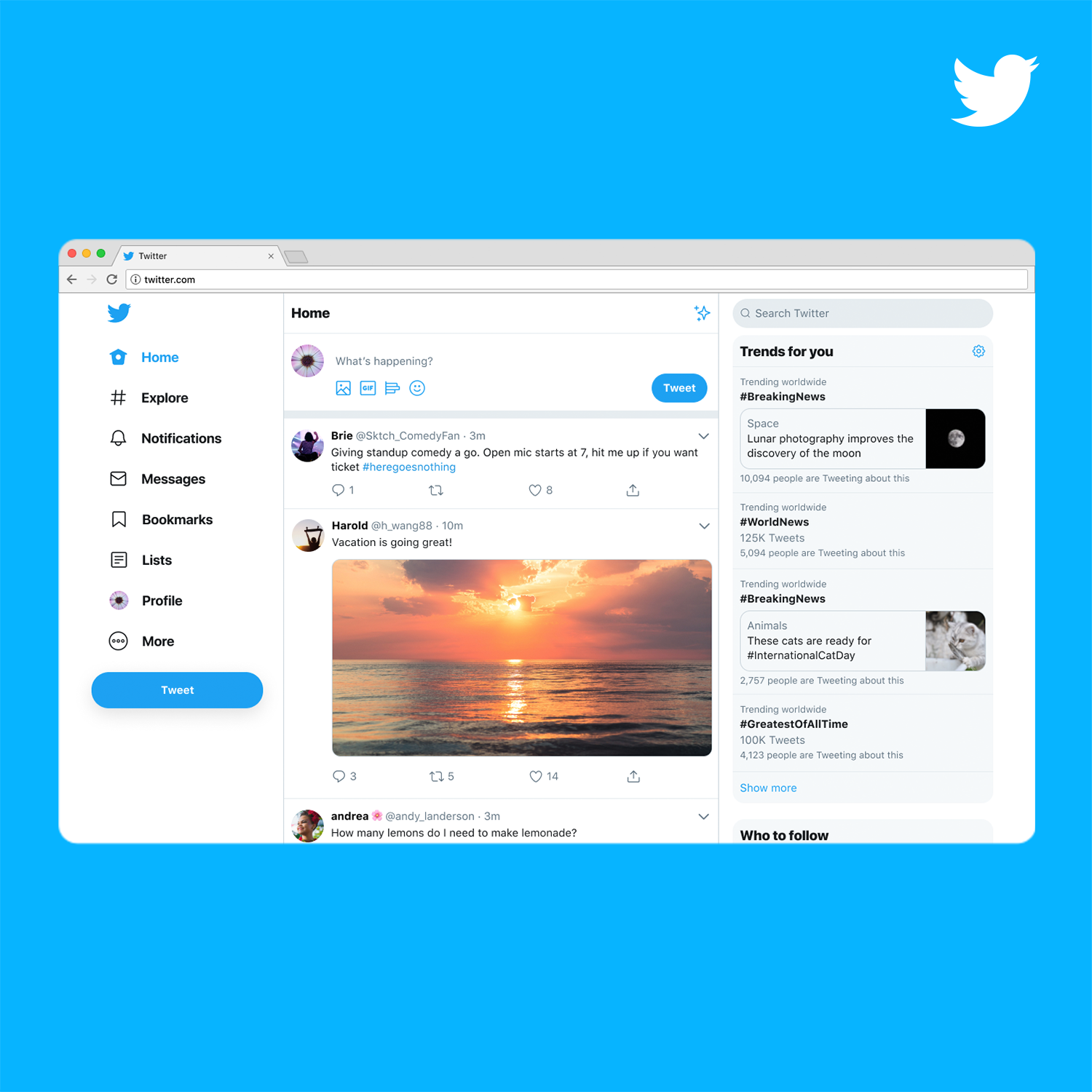

Introducing a new Twitter.com

Twitter to Develop a Decentralized Social Media Platform

Twitter Turns 17: A Look Back at the Evolution of the Social Media Platform