Twitter Hitler - Unpacking Platform Challenges And User Experience

There are moments when a platform's reputation faces tough tests that put its values, and the way it operates, under a magnifying glass. When certain phrases or topics emerge in public discourse, like the one we're looking at today, they often bring a host of questions about content, community, and the general direction of an online gathering place. It's about more than just words; it's about what a digital space means to its many participants.

A recent situation appears to have involved a platform making a significant decision: it stopped certain advertisements from appearing on its pages. An action like this can signal a shift in how a platform wants to be perceived or what kind of content it is willing to host. Such a move usually comes with its own set of reasons, typically tied to upholding certain standards or responding to public feedback about what is, or is not, acceptable within its digital boundaries.

In fact, this particular instance went further: the platform redirected a substantial sum of money, specifically the $1.9 million that a particular entity had spent globally on promotional efforts. This amount, it appears, is now going toward academic research into elections and other related initiatives. The gesture suggests a commitment to broader societal issues, turning a past commercial relationship into something that could benefit public understanding and community projects.

Table of Contents

- How Does Content Policy Shape the User Environment?

- What Happens When Advertisers Steer Clear of "Twitter Hitler" Related Content?

- Understanding the User's Viewpoint on "Twitter Hitler" and Platform Management

- How Do Profile Pictures and Digital Identity Play a Part?

- The Platform's Financial Standing and "Twitter Hitler" Concerns

- Staying Informed and the Challenge of "Twitter Hitler" Content

- Connecting and Discovering on the Platform

- Technical Aspects and "Twitter Hitler" Content Display

How Does Content Policy Shape the User Environment?

The rules a platform sets for what people can say and share shape the feel of the whole place. We hear, for instance, about the platform stopping a certain group from running ads on its pages. An action like this speaks volumes about what the platform deems acceptable for public viewing and what it considers to be crossing a line. It is a very direct way of managing the overall atmosphere and the kinds of conversations that happen there. When a platform bars someone from advertising, it sends a clear message about the associations it is willing to have and the messages it does not want to amplify. The decision can also have ripple effects, influencing how other organizations view the platform and whether they feel it aligns with their own values.

It's a constant balancing act: allowing open expression while keeping the space safe and welcoming for a vast number of users. How these policies are applied influences the daily experience of everyone involved, from individuals sharing thoughts to larger groups trying to get their ideas out. Content governance is a big subject, and it is continually debated and adjusted as new situations come up. The advertising ban shows how seriously the platform takes its role in curating the environment for its community. It's not just about what is said, but also about who is saying it and what kind of support that voice receives from the platform itself. In a way, these policies are the invisible threads that hold the digital fabric together, ensuring a certain level of decorum and safety for its inhabitants.

What Happens When Advertisers Steer Clear of "Twitter Hitler" Related Content?

When advertisers decide they don't want their brand appearing next to certain kinds of talk or imagery, it can have a big impact on a platform's finances. We saw an example of this, apparently, when advertisers left because they didn't want to be linked with what was described as "homophobic" content. The same situation often comes up when discussions or materials that raise "twitter hitler" related concerns become prominent. Companies that spend money on ads are careful about their public image, and they don't want their products or services to be seen alongside anything that might offend their customers or stir controversy. It's a matter of brand safety. If a platform is perceived as hosting a lot of objectionable content, businesses will naturally pull back their spending.

This creates a challenge for the platform, because advertising revenue is often a very important part of how it keeps running. The phrase "fleeing advertisers" paints a clear picture of how quickly money can disappear when these issues surface. The platform then has to work extra hard to show that it is a safe and appropriate place for brands to present themselves. That can involve tightening content rules, improving moderation, and making public statements about its commitment to a positive environment. In this sense, advertisers' decisions act as a barometer of the perceived health and safety of the platform's content ecosystem. When the environment is seen as problematic, the bottom line suffers. This connection between content and commercial interest is a powerful one, and it means platforms are always walking a fine line when managing what is shared on their pages.

Understanding the User's Viewpoint on "Twitter Hitler" and Platform Management

People who use the platform have strong feelings about how it is managed, especially when it comes to bans and how content is reported. There is a sense, apparently, that the platform is "kind of shit with the bans and snitching." The phrase is blunt, but it captures the frustration some users feel when their accounts, or the accounts of people they follow, are suspended. The mention of "snitching" suggests distrust of the reporting mechanisms or of how reports are handled. When people discuss issues that might involve "twitter hitler" or similarly sensitive topics, the way bans are enacted becomes a big deal. Users want to feel that the rules are applied fairly and that there is a clear process for appealing an action or understanding why it was taken.

The perception of arbitrary or unfair bans can lead to a lot of dissatisfaction and a feeling that the platform is not listening to its community. Some users seem to see the platform's moderation as a game of "whack-a-mole," where new accounts pop up as old ones are taken down, a never-ending struggle against what some might call "dumb shenanigans." This language highlights a real tension between the platform's need to maintain order and users' desire for freedom of expression. It's a constant push and pull, with users often feeling that efforts to control content are overzealous or misdirected. This user perspective is arguably crucial for the platform to consider, because it directly affects how people feel about using the service and whether they see it as a welcoming or a restrictive place. Feelings about bans and reporting are an important part of the overall user experience.

How Do Profile Pictures and Digital Identity Play a Part?

Your profile picture is an important part of how you present yourself online. It's a personal image that you upload to your profile, and the general idea is that it should be a photo of you that people can recognize. This seemingly simple detail plays a bigger role in how people interact on the platform than it might appear. When people discuss various topics, even sensitive ones that touch on "twitter hitler" type issues, seeing a recognizable face can add a layer of authenticity to the conversation. It helps build trust and makes the digital space feel a little more human. Without a clear image, it can be harder to connect with others or to feel that you are talking to a real person. This is why the platform, it seems, emphasizes having a photo that is genuinely of you and lets others know who they are engaging with. It's about establishing identity and presence in a world that can feel very anonymous.

The way these images are handled, and the formats they come in, is also something the platform has to manage, since "these pics that most sites use are from a few formats." In practice, this means making sure that whatever image you choose displays properly across different devices and viewing experiences. In a sense, your profile picture is your digital handshake: a first impression that shapes how others perceive your contributions to ongoing discussions, whatever they may be.

The Platform's Financial Standing and "Twitter Hitler" Concerns

The financial health of a platform can be influenced by many things, and public perception, especially concerning controversial content, is one of them. There has been talk, for instance, that the platform is now worth "more than 70% less today than when Musk bought it only two years ago." A drop in value of this size is usually linked to a combination of factors, but the departure of advertisers, as discussed earlier, plays a big part. When content seen as problematic, such as discussions that raise "twitter hitler" concerns, becomes more prevalent or is perceived as inadequately managed, it creates a less appealing environment for businesses. These businesses don't want their brand associated with anything that could cause a public outcry or damage their reputation.

The decline in value is, in part, a reflection of these commercial decisions. It is a clear signal from the market that the platform's ability to attract and retain advertising revenue has been significantly affected. Valuation is a critical measure of a platform's overall success and long-term viability. When a platform's perceived worth falls this sharply, it typically indicates real challenges that need to be addressed, whether they relate to content moderation, user engagement, or broader market conditions. The economic impact, in other words, is a tangible consequence of how the platform is seen and used by both its community and its commercial partners.

Staying Informed and the Challenge of "Twitter Hitler" Content

One of the main reasons people use the platform is to "keep up to date with" what's happening in the world and with the people they care about. It's a place where you can "search twitter for people, topics, and hashtags you care about" and "explore trending topics." This ability to stay informed and connected is a core part of the platform's appeal. However, this very openness also presents a challenge, especially in managing the sheer volume and variety of content, including discussions that touch on "twitter hitler" or other difficult subjects. The platform is, in a way, constantly asking "what's happening?", which suggests an ongoing effort to keep track of the vast stream of information flowing across its network.

Many people do find it a good source of information: the claim that "Over half of people agree that twitter is a good place to" is cited alongside figures of 58% and 56%, attributed to sources such as Mintel and Twitter Insiders. Still, the presence of controversial content complicates the picture. The platform has to balance its role as a real-time information hub with its responsibility to manage harmful or problematic content. That means continually refining its tools and approaches so that users can find what they are looking for without being exposed to unwanted material. The platform's strength as an information source is therefore also where some of its biggest challenges lie, particularly in maintaining a healthy information ecosystem for a diverse global audience.

Connecting and Discovering on the Platform

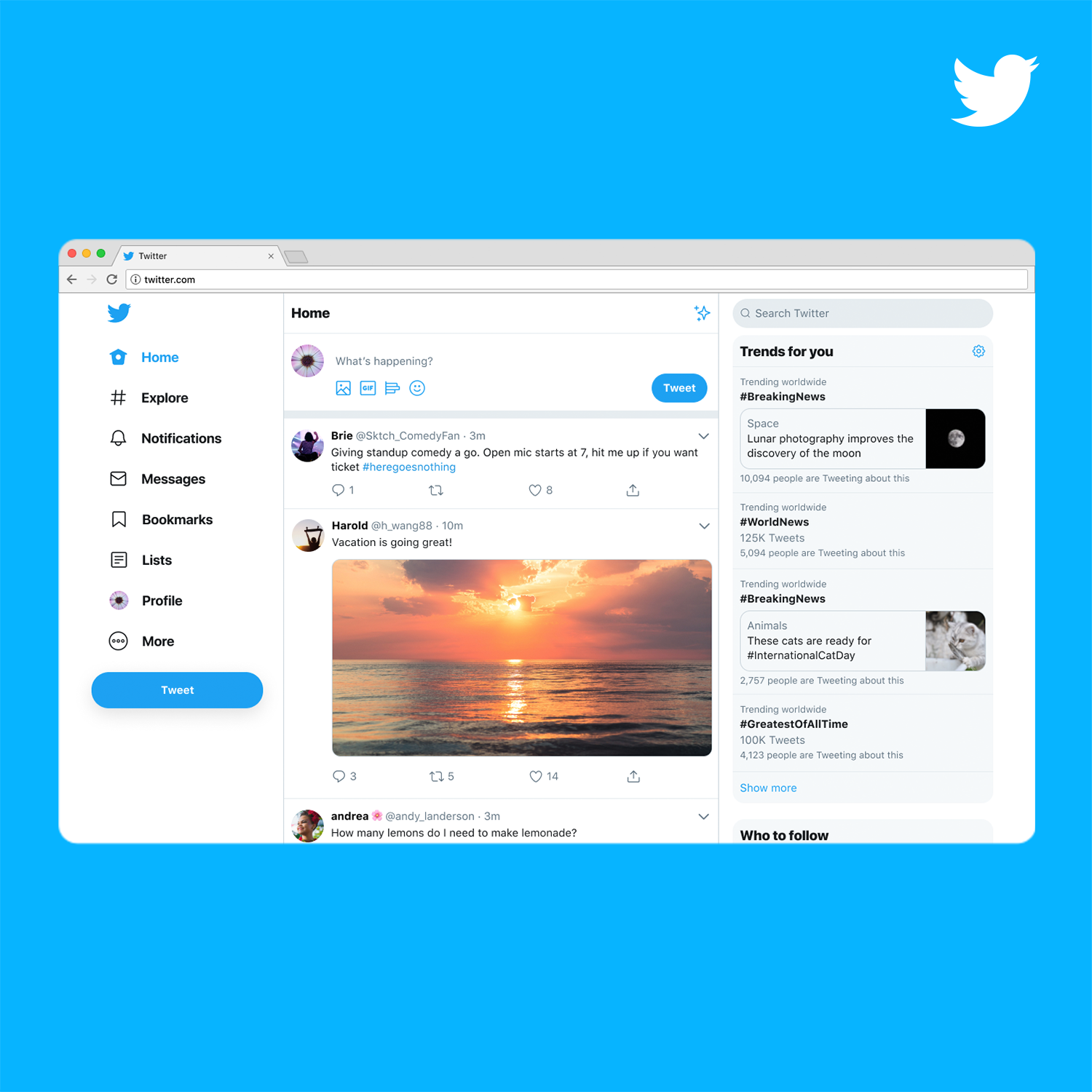

The platform is built around the idea of connecting people and helping them find things that interest them. You "sign in to twitter to check notifications, join conversations, and catch up on tweets from people you follow." This is the everyday rhythm for many users: being part of ongoing discussions and seeing what the people you care about are sharing. You also "join conversations, follow interests, and connect with others on twitter's home page," which emphasizes the social side of the platform and the discovery of new ideas and communities. This ease of connection is arguably what makes the platform so compelling for so many. It allows for a fluid exchange of thoughts and perspectives, which can be very enriching.

However, this openness also means the platform needs good systems in place for managing all this interaction. For example, a specific date such as "Start date Jan 13, 2025" might refer to a policy change or a new feature meant to improve how people connect or how content is managed. It suggests that the platform keeps evolving its approach to user interaction and content flow. The core purpose, though, remains the same: enabling people to stay connected, share their updates, and explore the wide range of topics and discussions available. This continuous flow of information and interaction is what keeps the platform vibrant and relevant for its global community.

Technical Aspects and "Twitter Hitler" Content Display

Behind the scenes, many technical factors make the platform work, and these can affect what you see and how you experience content. For example, we hear users asking, "anyone's x feeds still down from the hack?" This points to the real possibility of technical disruptions or security incidents that temporarily prevent people from accessing their content. It's a reminder that even robust digital systems can face challenges. Then there is the matter of how images are presented: since "these pics that most sites use are from a few formats," the platform needs to handle several image types so that they look right for everyone. Sometimes you might even encounter a message like "We would like to show you a description here but the site won't allow us," which can indicate a technical block or a content restriction that prevents certain information from being displayed.

These technical elements are the backbone of the platform, and they need to function smoothly for users to have a good experience. When discussions or content raise "twitter hitler" related concerns, the technical systems also play a role in how that content is moderated, filtered, or kept from being seen. It's not just about the rules, but also about the underlying technology that enforces them and keeps the platform accessible and functional. The unseen technical workings are just as important as the visible content in shaping the overall user experience.
